In 2020, our joint paper with EUROPOL and the United Nations Interregional Crime and Justice Research Institute (UNICRI) outlined the malicious uses of artificial intelligence and predicted the use and abuse of deepfake technologies by cybercriminals. It did not take long for that prediction to become reality: we are already observing attacks in the wild.

The growing number of deepfake attacks is significantly reshaping the threat landscape for organizations, financial institutions, celebrities, political figures, and even ordinary people. The use of deepfakes brings attacks such as business email compromise (BEC) and identity verification bypass to new levels.

Several preconditions and reasons explain why these attacks have been successful:

- All the technological pillars are in place. The source code for deepfake generation is public and available to anyone willing to use it.

- The number of publicly available images is enough for bad actors to create millions of fake identities using deepfake technologies.

- Criminal groups are early adopters of such technologies and regularly discuss the use of deepfake technologies to increase the effectiveness of existing money laundering and monetization schemes.

- We are seeing deepfakes implemented in newer attack scenarios, such as social engineering attacks, where deepfakes are a key technological enabler.

Let us examine how this emerging trend has been developing and evolving in recent years.

Stolen identities in deepfake promotional scams

It has become common to see images of famous people used in dubious search engine optimization (SEO) campaigns on news and social media sites. Usually, the advertisements are in some way related to the expertise of the selected celebrity, and they are specifically designed to bait users and get them to select links under the images.

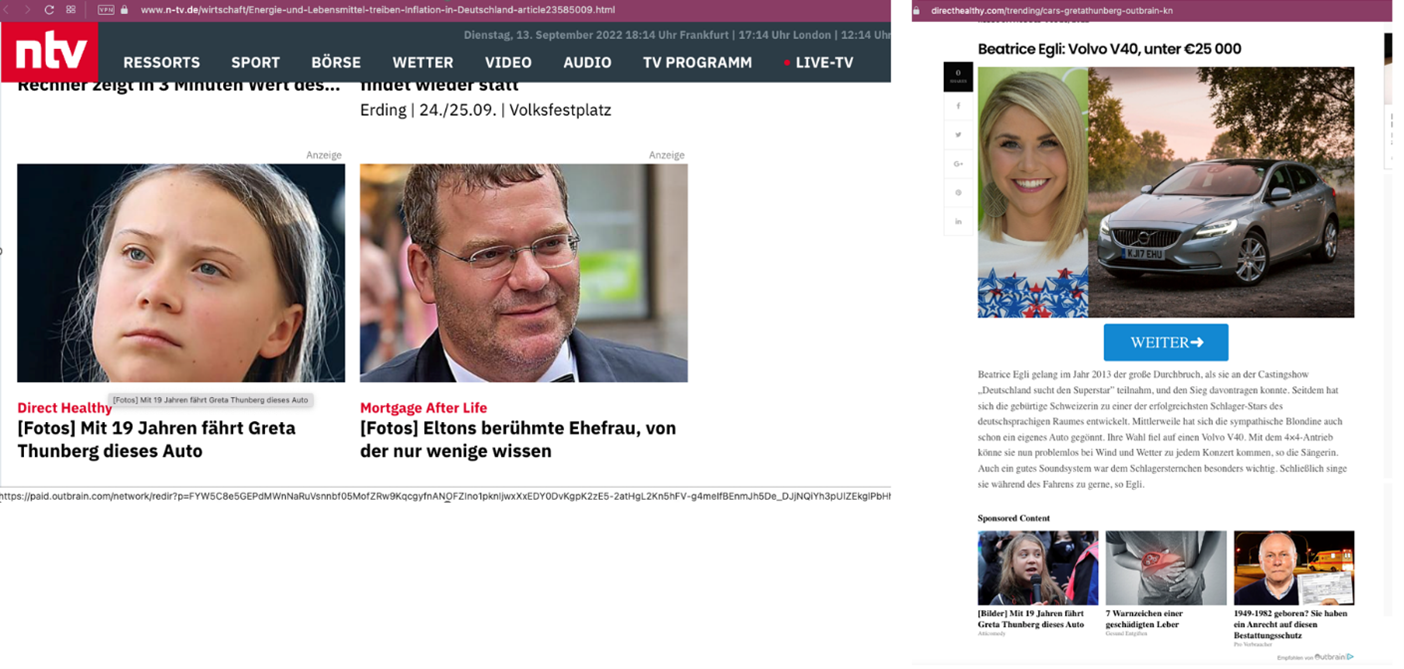

Figure 1, for example, shows a screenshot taken on Sept. 13, 2022, of advertisements on the German news site N-TV. The advertisements feature well-known individuals who are likely unaware that their images are being used. If a user selects these ads, a page with an automobile advertisement appears (as seen on the right side of the figure). Selecting a similar advertisement leads to another promotional page (seen in Figure 2).

Unscrupulous advertising groups have been using this type of media content in different monetization schemes for years. However, lately we have seen interesting developments in these advertisements, as well as a change in the technology that enables these campaigns.

Recently, a few digital media and SEO monetization groups have been using publicly shared media content to create deepfake models of famous individuals. These groups use the personas of celebrities and influencers without their consent, distributing the deepfake content for different promotional campaigns.

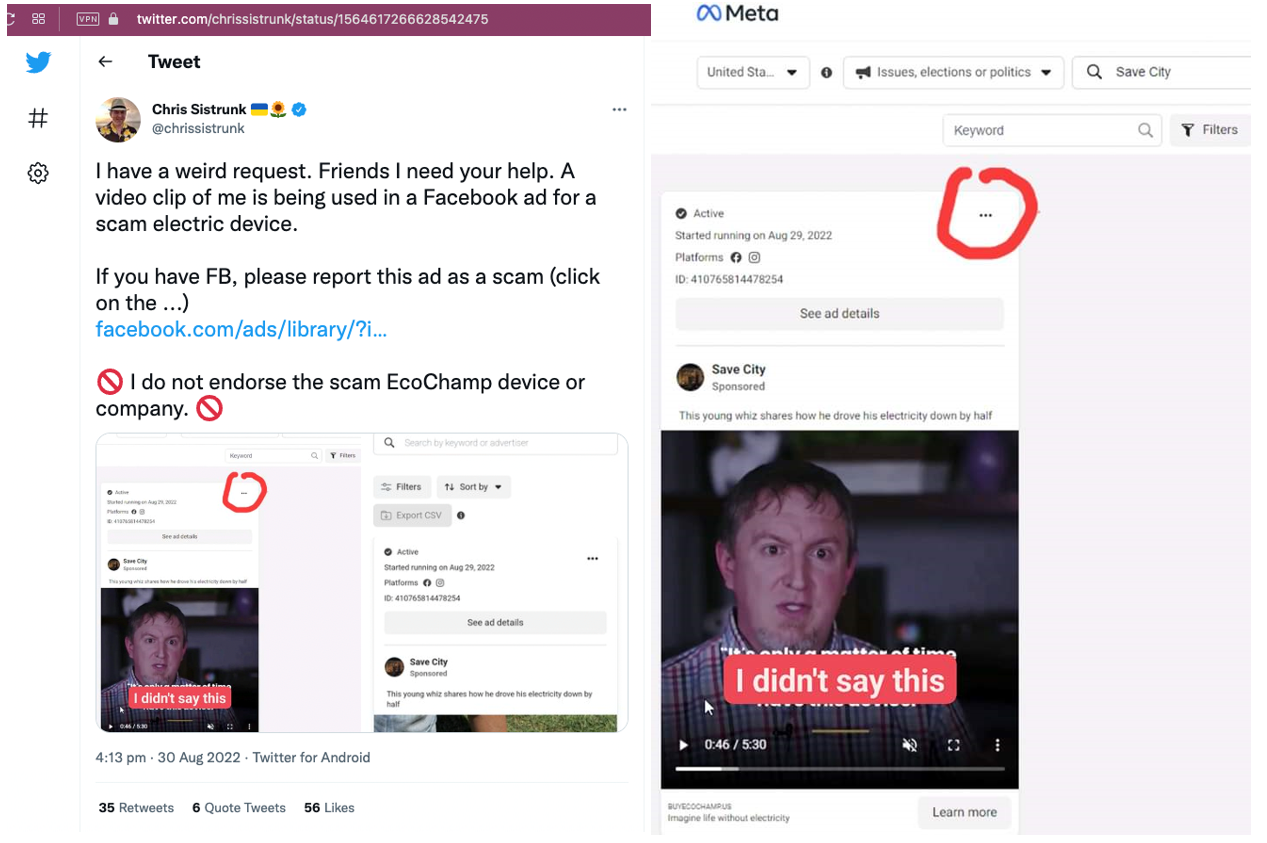

One such example is highlighted in Figure 3. A promotional campaign was seen on Meta featuring Chris Sistrunk, a cybersecurity expert. Notably, he neither endorsed the product featured in the campaign nor said any of the content in the ad’s video.

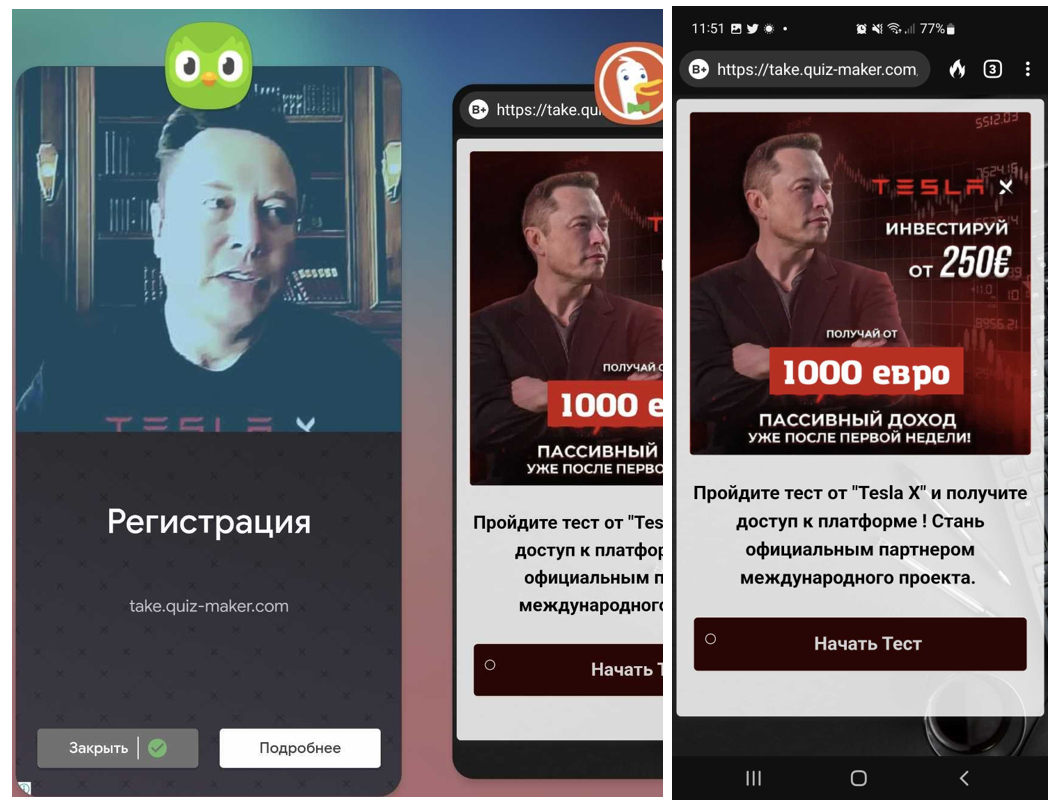

One of the authors of this blog also saw advertisements in legitimate and popular mobile applications that used not just static images but also deepfake videos of Elon Musk to advertise “financial investment opportunities.”

The next escalation for deepfakes is the capability to conduct video calls while impersonating well-known people.

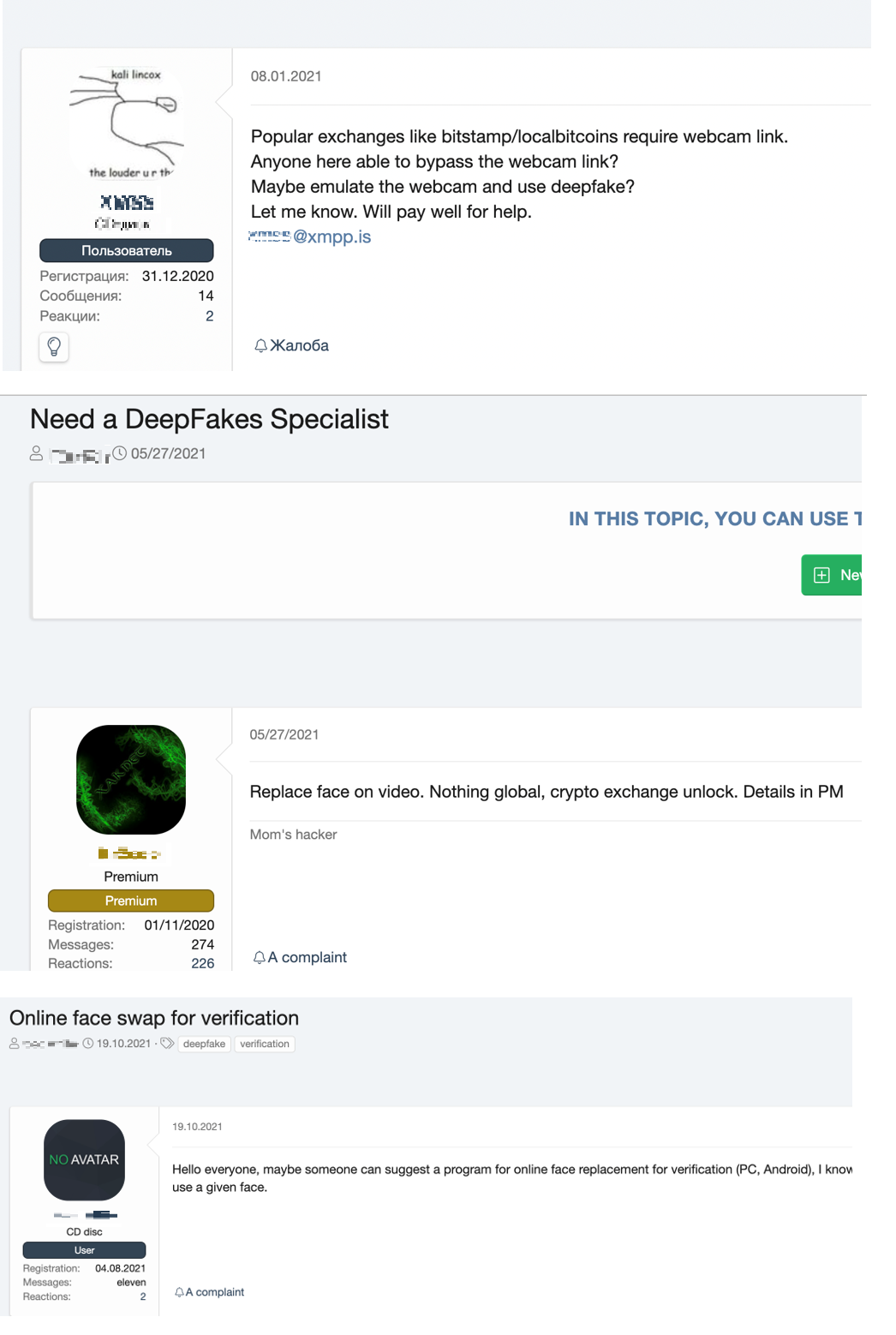

The topic of deepfake services is quite popular on underground forums. In these discussion groups, we see that many users are targeting online banking and digital finance verification. It is likely that criminals interested in these services already possess copies of victims’ identity documents, but they also need a video stream of the victims to steal or create accounts. These accounts could be used later for malicious activities like money laundering or illicit financial transactions.

Deepfakes in the underground

Underground criminal attacks using verification tools and techniques have undergone a notable evolution. For example, we see that account verification services have been available for quite a while now. However, as e-commerce evolved using modern technology and online chat systems for identity verification, criminals also evolved their techniques and developed new methods for bypassing these verification schemes.

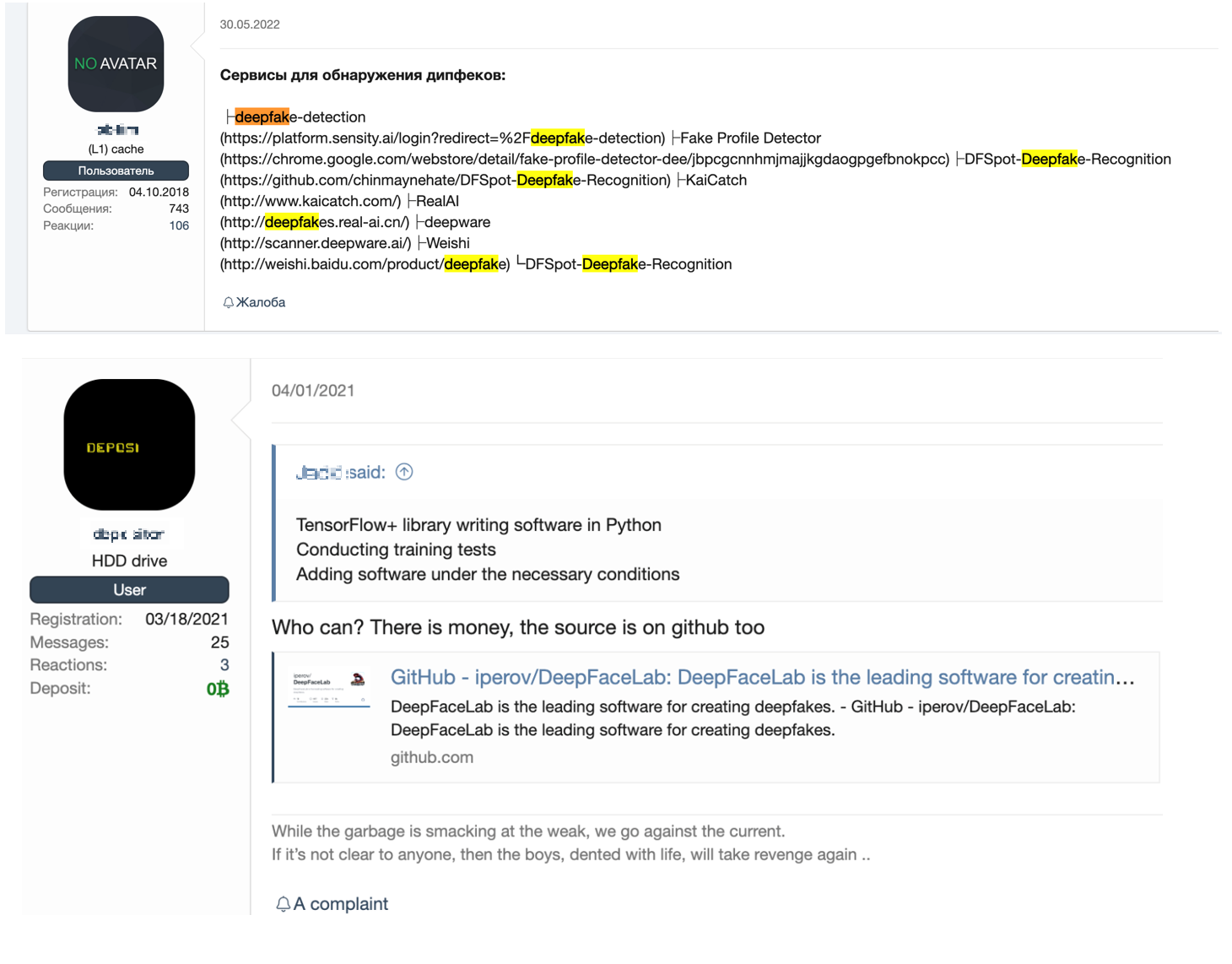

In 2020 and early 2021, we already saw that some underground forum users were searching for “deepfake specialists” for crypto exchange and personal accounts.

In fact, some tools for deepfake production have been available online for a while now, for example on GitHub. We also see that tools for deepfake and deepfake detection have been attracting attention in underground forums.

Recently, a news story was released about a deepfake of a communications executive at cryptocurrency exchange site Binance. The fake was used to trick representatives of cryptocurrency projects in Zoom calls.

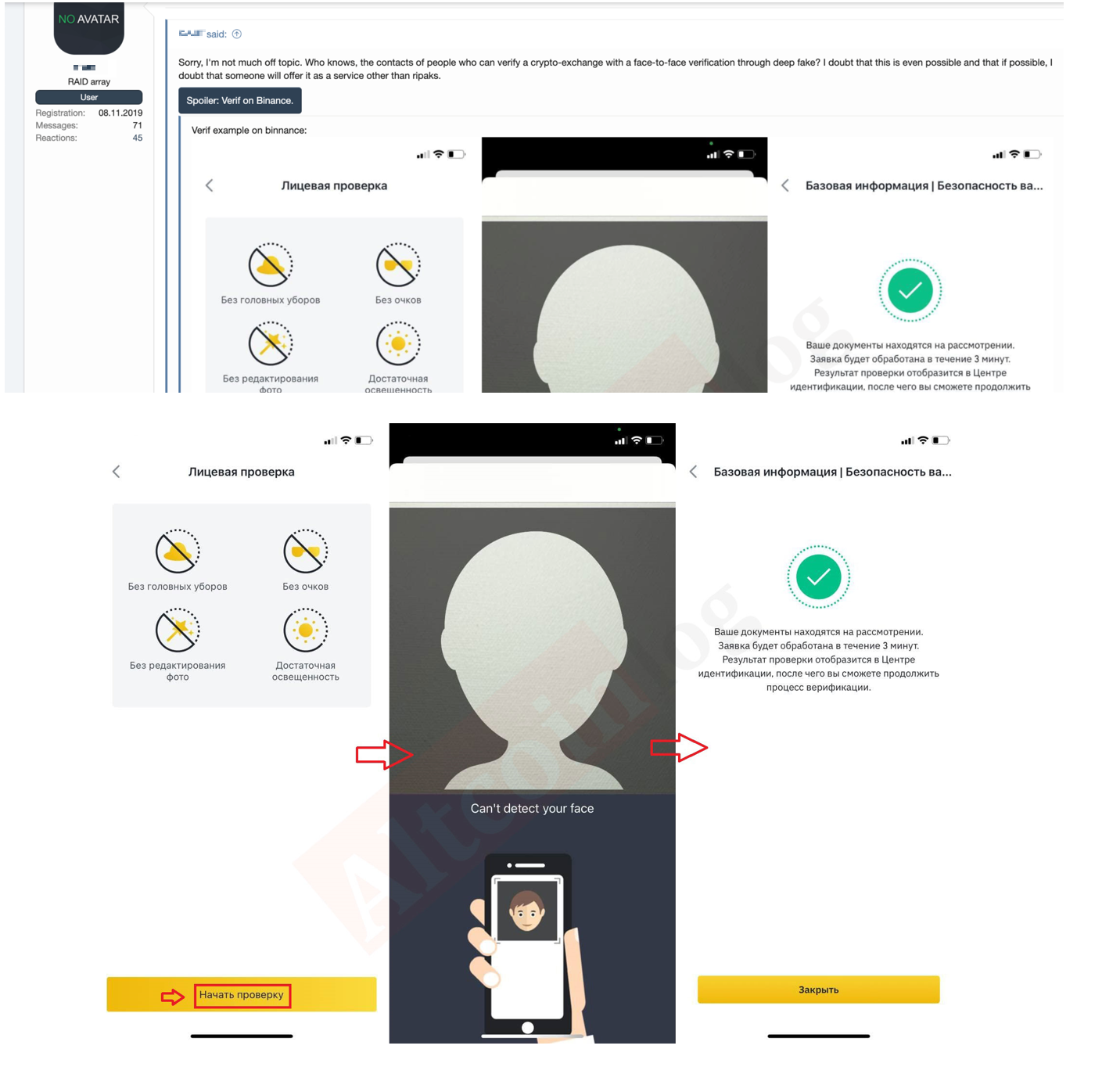

As seen in Figure 7, which shows conversations from the same underground thread in Figure 6, there were discussions on how to bypass Binance verification using deepfakes. Since 2021, users have been trying to find ways to get through Binance’s face-to-face identification.

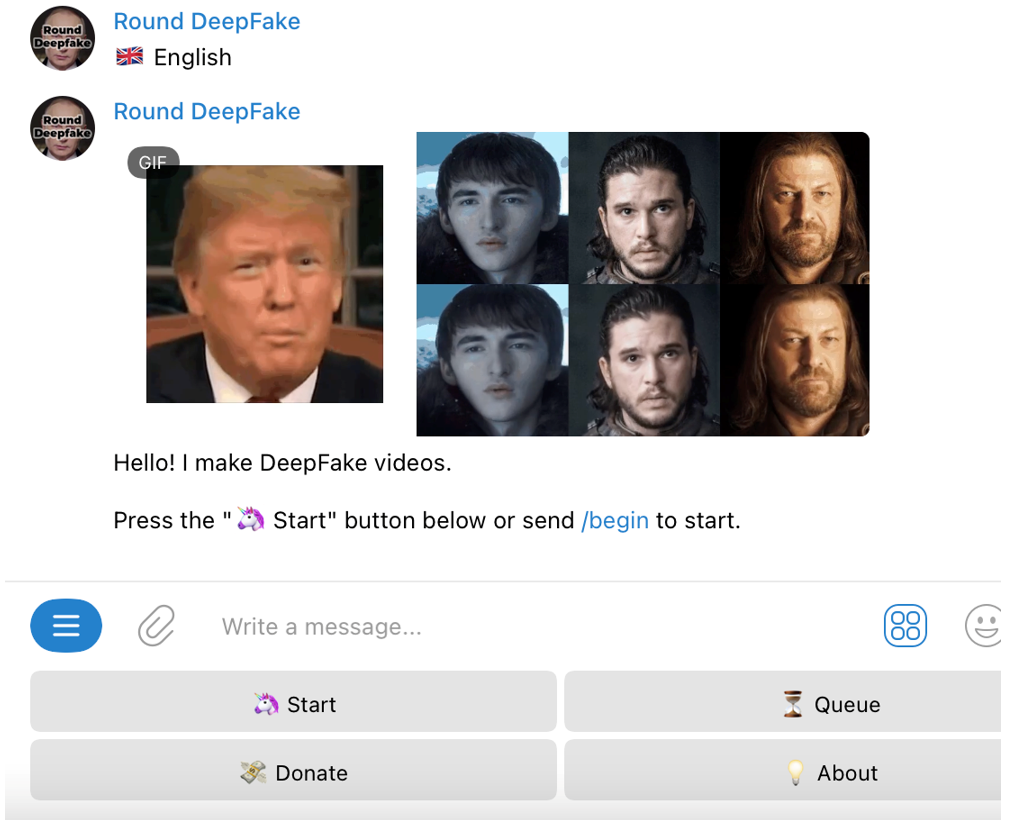

On the tools side, there are also easy-to-use bots that further simplify the process of creating deepfake videos. One example is the Telegram bot RoundDFbot.

These deepfake videos are already being used to cause problems for public figures. Celebrities, high-ranking government officials, well-known corporate figures, and other people who have many high-resolution images and videos online are the most easily targeted. Social engineering scams using their faces and voices are already proliferating.

Given the tools and available deepfake technology, we can expect to see even more attacks and scams aimed at manipulating victims through voice and video fakes.

How deepfakes can affect existing attacks, scams, and monetization schemes

Deepfakes can be adapted by criminal actors for current malicious activities, and we are already seeing the first wave of these attacks. The following is a list of both existing attacks and attacks that we can expect in the near future:

• Messenger scams. Impersonating a money manager and calling about a money transfer has been a popular scam for years, and now criminals can use deepfakes in video calls. For example, they could impersonate someone and contact their friends and family to request a money transfer or ask for a simple top-up in their phone balance.

• BEC. This attack was already quite successful even without deepfakes. Now attackers can use fake videos in calls, impersonate executives or business partners, and request money transfers.

• Making accounts. Criminals can use deepfakes to bypass identity verification services and create accounts in banks and financial institutions, possibly even government services, on behalf of other people, using copies of stolen identity documents. These criminals can use a victim’s identity and bypass the verification process, which is often done through video calls. Such accounts can later be used in money laundering and other malicious activities.

• Hijacking accounts. Criminals can take over accounts that require identification using video calls. They can hijack a financial account and simply withdraw or transfer funds. Some financial institutions require online video verification to have certain features enabled in online banking applications. Obviously, such verifications could be a target of deepfake attacks as well.

• Blackmail. Using deepfake videos, malicious actors can mount more powerful blackmail and other extortion-related attacks. They can even plant fake evidence created using deepfake technologies.

• Disinformation campaigns. Deepfake videos also enable more effective disinformation campaigns and could be used to manipulate public opinion. Certain attacks, like pump-and-dump schemes, rely on messages from well-known persons. Now these messages can be created using deepfake technology. These schemes can certainly have financial, political, and even reputational repercussions.

• Tech support scams. Deepfake actors can use fake identities to social-engineer unsuspecting users into sharing payment credentials or to gain access to IT assets.

• Social engineering attacks. Malicious actors can use deepfakes to manipulate friends, families, or colleagues of an impersonated person. Social engineering attacks, like those for which Kevin Mitnick was famous, can therefore take a new spin.

• Hijacking of internet-of-things (IoT) devices. Devices that use voice or face recognition, like Amazon’s Alexa and many smartphone brands, will be on the target list of deepfake criminals.

Conclusion and security recommendations

We are already seeing the first wave of criminal and malicious activities using deepfakes. However, it is likely that there will be more serious attacks in the future because of the following issues:

- There is enough content exposed on social media to create deepfake models for millions of people. People in every country, city, village, or particular social group have their social media exposed to the world.

- All the technological pillars are in place. Attack implementation does not require significant investment, and attacks can be launched not just by nation-states and corporations but also by individuals and small criminal groups.

- Actors can already impersonate and steal the identities of politicians, C-level executives, and celebrities. This could significantly increase the success rate of certain attacks such as financial schemes, short-lived disinformation campaigns, public opinion manipulation, and extortion.

- The identities of ordinary people are available to be stolen or recreated from publicly exposed media. Cybercriminals can steal from the impersonated victims or use their identities for malicious activities.

- The modification of deepfake models can lead to a mass appearance of identities of people who never existed. These identities can be used in different fraud schemes. Indicators of such appearances have already been spotted in the wild.

What can individuals and organizations do to address and mitigate the impact of deepfake attacks? We have some recommendations for ordinary users, as well as organizations that use biometric patterns for validation and authentication. Some of these validation methods could also be automated and deployed at scale.

- A multi-factor authentication approach should be standard for any authentication of sensitive or critical accounts.

- Organizations should authenticate a user with three basic factors: something that the user has, something that the user knows, and something that the user is. Make sure the “something” items are chosen wisely.

- Personnel awareness training, done with relevant samples, and the know-your-customer (KYC) principle are necessary for financial organizations. Deepfake technology is not perfect, and there are certain red flags that an organization’s staff should look for.

- Social media users should minimize the exposure of high-quality personal images.

- For verification of sensitive accounts (for example bank or corporate profiles), users should prioritize the use of the biometric patterns that are less exposed to the public, like irises and fingerprints.

- Significant policy changes are required to address the problem on a larger scale. These policies should address the use of current and previously exposed biometric data. They must also take into account the state of cybercriminal activities now as well as prepare for the future.
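The factor-based recommendations above can be illustrated with a short sketch. The Python below is a minimal, hypothetical policy check, not a production authentication system. The names `Factor`, `FactorCategory`, `EXPOSED_BIOMETRICS`, and `meets_policy` are assumptions introduced here for illustration, as is the rule that face and voice matches do not satisfy the "something the user is" category on their own. The sketch accepts a login attempt only when a verified factor covers each of the three categories.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Iterable


class FactorCategory(Enum):
    HAS = "something the user has"
    KNOWS = "something the user knows"
    IS = "something the user is"


# Assumption for illustration: biometrics that are widely exposed in public
# media (and therefore easier to deepfake) are not accepted on their own.
EXPOSED_BIOMETRICS = {"face", "voice"}


@dataclass(frozen=True)
class Factor:
    name: str                  # hypothetical labels, e.g. "password", "fingerprint"
    category: FactorCategory
    verified: bool             # result of the (out-of-scope) verification step


def meets_policy(factors: Iterable[Factor]) -> bool:
    """Return True only if every category is covered by a verified factor."""
    covered = set()
    for factor in factors:
        if not factor.verified:
            continue  # failed checks never count
        if factor.category is FactorCategory.IS and factor.name in EXPOSED_BIOMETRICS:
            continue  # publicly exposed biometrics do not count (assumed rule)
        covered.add(factor.category)
    return covered == set(FactorCategory)


# Example: a face match alone does not cover the "is" category,
# while a fingerprint does.
with_face = [
    Factor("hardware_token", FactorCategory.HAS, True),
    Factor("password", FactorCategory.KNOWS, True),
    Factor("face", FactorCategory.IS, True),
]
with_fingerprint = with_face[:2] + [Factor("fingerprint", FactorCategory.IS, True)]

print(meets_policy(with_face))         # False
print(meets_policy(with_fingerprint))  # True
```

How strictly to treat exposed biometrics is a design choice. An organization might instead accept a face match only alongside a liveness check or a second, less exposed biometric.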

The security implications of deepfake technology and attacks that employ it are real and damaging. As we have demonstrated, it is not only organizations and C-level executives that are potential victims of these attacks but also ordinary individuals. Given the wide availability of the necessary tools and services, these techniques are accessible to less technically sophisticated attackers and groups, meaning that malicious actions could be executed at scale.
